This blog describes on - Diagnosing the Scheduler Timeout Error

When you run some huge bigsql queries or running many concurrent queries, sometimes you may get scheduler error. But when you rerun it, it will return the results.

In the db2diag log, you will see the error

2018-10-22-15.54.09.353224+540 I56948911015 LEVEL: Error

PID : 29917 TID : 70312999533568 PROC : db2sysc 0

INSTANCE: bigsql NODE : 000 DB : BIGSQL

APPHDL : 0-2278 APPID: *1.bigsql.199921064012

AUTHID : BIGSQL HOSTNAME: testcluster234.ibm.com

EDUID : 228 EDUNAME: db2agent (BIGSQL) 0

FUNCTION: DB2 UDB, base sys utilities, sqeBigSqlSchedulerInternal::registerQuery, probe:2690

MESSAGE : ZRC=0xFFFFEBB1=-5199

SQL5199N The statement failed because a connection to a Hadoop I/O

component could not be established or maintained. Hadoop I/O

component name: "". Reason code: "". Database partition number: "".

DATA #1 : String, 140 bytes

Transport Exception occurred. The BigSql Scheduler service may not be running or the scheduler client request timeout may not be sufficient.

If you get the error,

1) You need to check whether the scheduler is running.

$BIGSQL_HOME/bin/bigsql status -scheduler

2) If the scheduler is running, then you may need to check the time taken for scanning metadata & registerQueryNew. To get the time, you need to check the /var/ibm/bigsql/logs/bigsql-sched-recurring-diag-info.log

[requestScanMetadata]

top (5) max elapsed times:

time= 4363; info= [TableSchema(schName:<schema>, tblName:<tablename>, impersonationID:bigsql)]

time= 2339; info= [TableSchema(schName:<schema>, tblName:<tablename>, impersonationID:user_tyre)]

time= 32; info= [TableSchema(schName:<schema>, tblName:<tablename>, impersonationID:bigsql)]

elapsed-time-range-in-millis and frequency-of-calls-in-that-range:

range= 0-10; freq= 0

range= 10-100; freq= 1

range= 100-1000; freq= 0

range= 1000-10000; freq= 2

range= 10000-100000; freq= 0

range= 100000-1000000; freq= 0

range= 1000000-9223372036854775807; freq= 0

[registerQueryNew]

top (5) max elapsed times:

time= 448892; info= schema.tablename;

time= 245079; info= schema.tablename;

time= 204996; info= schema.tablename;

time= 152660; info= schema.tablename;

time= 151576; info= schema.tablename;

elapsed-time-range-in-millis and frequency-of-calls-in-that-range:

range= 0-10; freq= 71

range= 10-100; freq= 18

range= 100-1000; freq= 16

range= 1000-10000; freq= 6

range= 10000-100000; freq= 13

range= 100000-1000000; freq= 9

range= 1000000-9223372036854775807; freq= 0

You need to check the max time taken during the time when query failed. In above example, the max time is 448892 millisecond ( 7.48 mins).

Check the timeout set for the property (scheduler.client.request.IUDEnd.timeout & scheduler.client.request.timeout) in /usr/ibmpacks/current/bigsql/bigsql/conf/bigsql-conf.xml.

The default values are scheduler.client.request.timeout = 360000 (6 Mins) & scheduler.client.request.IUDEnd.timeout = 600000 (10 Mins)

<property>

<name>scheduler.client.request.IUDEnd.timeout</name>

<value>600000</value>

<description>

Scheduler clients will wait for scheduler to respond for

these many milli-seconds before timing out during RPC for

finalizing Insert/Update/Delete.

For Inserting/Updating/Deleting very large dataset,

this many need to be adjusted.

</description>

</property>

<property>

<name>scheduler.client.request.timeout</name>

<value>3600000</value>

<description>

Scheduler clients will wait for scheduler to respond for

these many milli-seconds before timing out during any RPC

call other than finalizing Insert/Update/Delete.

For query over very large dataset, this many need to be adjusted.

</description>

</property>

As per the logs the query is taking 7.48 mins but the timeout we set in property is 6 mins. So we need to increase the timeout of property higher than the max timeout in the logs. You can change the timeout to 720000 (12 mins) and restart the BigSQL.

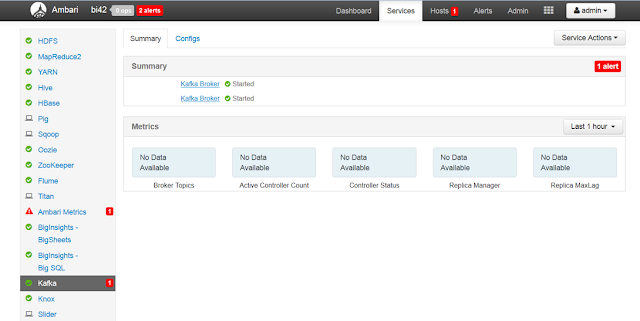

If you are using BigSQL 5.0.2 or above, you can do it from Ambari UI or you need to change it from /usr/ibmpacks/current/bigsql/bigsql/conf/bigsql-conf.xml